Introduction

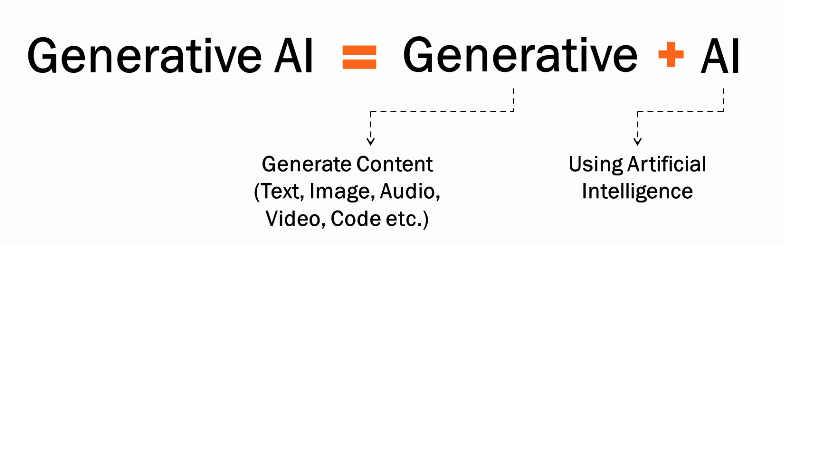

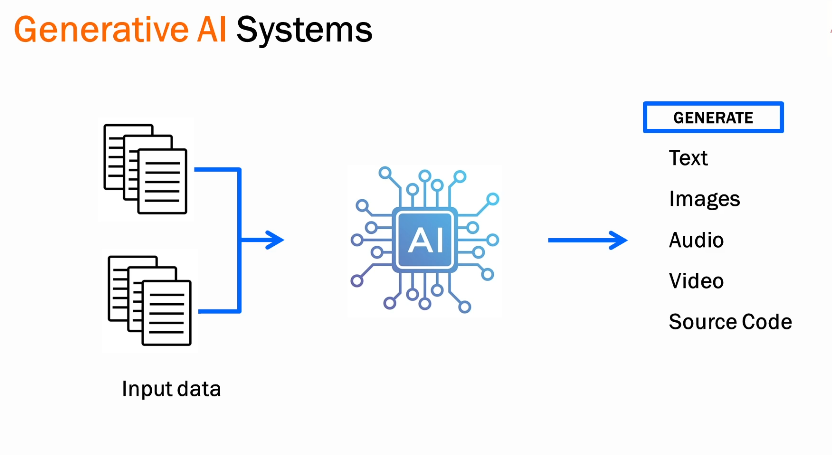

Generative AI (Gen AI) is a type of AI that can create new content such as images, audio, video, and code.

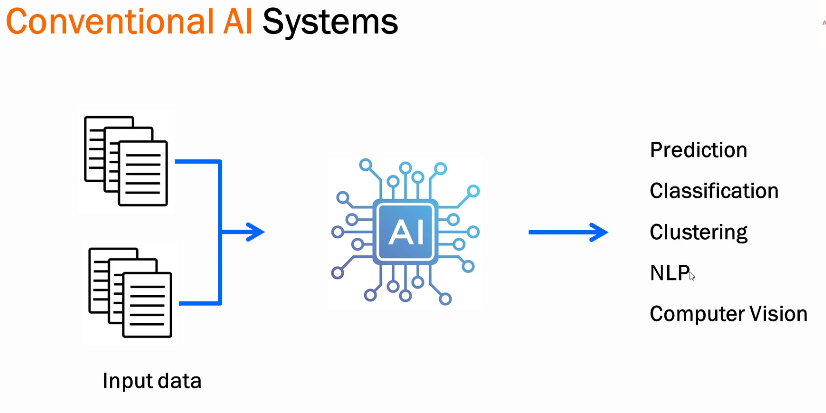

Conventional AI, also known as "narrow AI," refers to AI systems that are designed and trained to perform specific tasks.

- Recommendation systems: Netflix uses AI to suggest movies and TV shows based on a user's viewing history.

- Search engines: Google Search uses AI to rank search results based on relevance and user intent.

Generative AI, by contrast, uses artificial intelligence to generate new content, and that content can take almost any form.

What does Artificial Intelligence mean?

Everything we humans do, whether in science, the arts, or sports, relies on the human brain, the most intelligent entity on Earth. This is real intelligence. When we try to replicate this intelligence in a machine, making the machine as smart as a human being, we call it artificial intelligence, or AI.

So, artificial intelligence is the development of machines that can perform tasks that typically require human intelligence. For example:

- Medical systems: Diagnosing diseases from X-rays.

- Property prediction: Predicting property prices.

- Fraud detection: Detecting credit card fraud.

All of these are tasks that humans have traditionally performed using their inherent intelligence. But we can now enable machines to do the same, and that's the essence of artificial intelligence or AI.

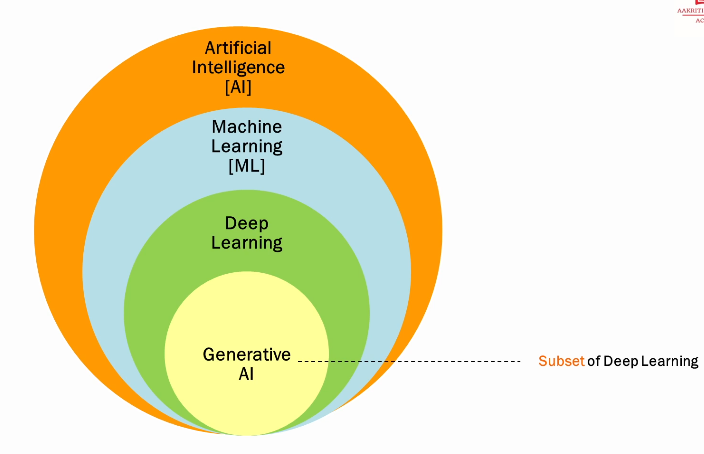

Artificial intelligence is a broad field of computer science that focuses on creating intelligent systems capable of performing tasks that typically require human intelligence.

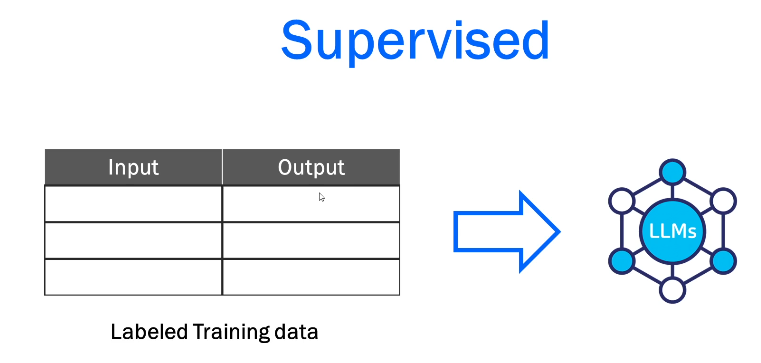

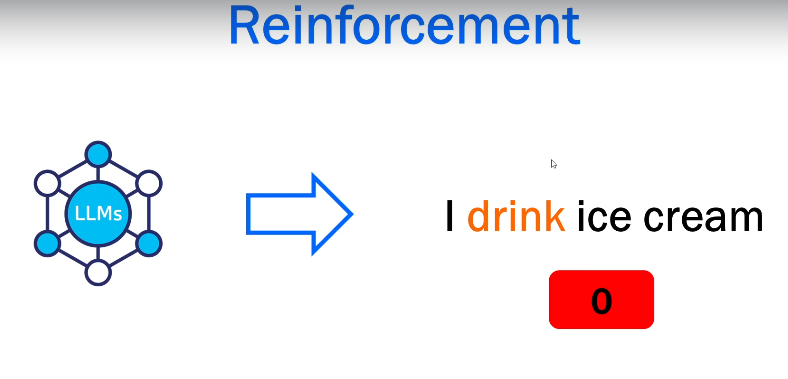

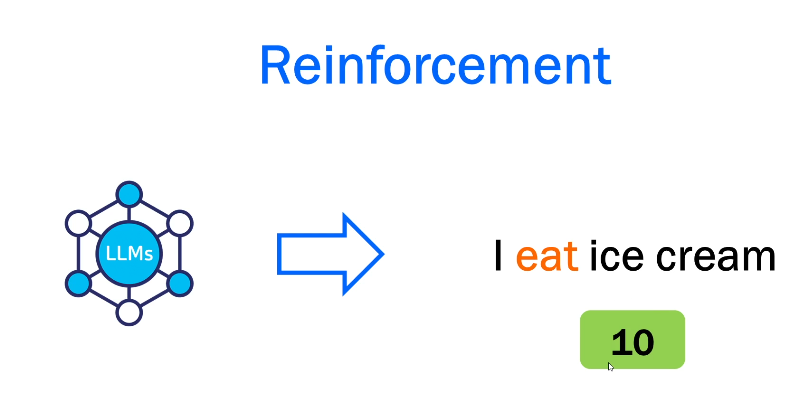

Machine learning is a subset of AI that focuses on the development of algorithms and models that enable computers to learn from data and make predictions or decisions without explicit programming.

Machine Learning: Teaching Machines to Learn

As the name implies, machine learning focuses on enabling machines to acquire knowledge and skills. The core objective is to train these machines to make predictions and decisions autonomously, without explicit programming from humans.

Essentially, we provide a machine with data, it learns from that data, and then it becomes capable of making predictions or taking actions independently, without further human intervention.
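To make the "provide data, learn, then predict" loop concrete, here is a minimal sketch in plain Python. It fits a straight line to a handful of made-up property records and then estimates the price of an unseen property. The function names (`fit_line`, `predict`) and all the numbers are assumptions chosen for illustration; real systems use far more data and richer models.

```python
# Toy illustration: learn a price rule from examples, then predict.
# All numbers below are made up for illustration.

def fit_line(xs, ys):
    """Fit y = slope * x + intercept by ordinary least squares."""
    n = len(xs)
    mean_x = sum(xs) / n
    mean_y = sum(ys) / n
    cov_xy = sum((x - mean_x) * (y - mean_y) for x, y in zip(xs, ys))
    var_x = sum((x - mean_x) ** 2 for x in xs)
    slope = cov_xy / var_x
    intercept = mean_y - slope * mean_x
    return slope, intercept


def predict(model, x):
    """Apply the learned line to a new input."""
    slope, intercept = model
    return slope * x + intercept


# Training data: area in square metres, price in thousands.
areas = [50, 80, 100, 120]
prices = [150, 240, 300, 360]

model = fit_line(areas, prices)   # the machine learns from the data
estimate = predict(model, 90)     # then predicts for an input it has not seen
print(round(estimate))
```

Nobody wrote a rule such as "price equals three times the area" into the program; the relationship was recovered from the examples, which is the sense in which the machine was not explicitly programmed.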

The Neural Network Within Us

Our bodies possess intricate networks of neurons, interconnected like a complex web. Imagine, for instance, seeing an object. Light enters your eyes, and neurons begin transmitting information, cascading from one to the next. This signal eventually reaches your brain, where the object is analyzed. Your brain then identifies it: "That's an apple," or "That's a cat."

The crucial takeaway is that these neurons function in multiple layers, each connected to the next. They process information, refine it, and then transmit it to the subsequent stage. This layered architecture, with each stage processing and enhancing information before passing it on, is fundamental to human intelligence.

Replicating Human Intelligence: Neural Networks

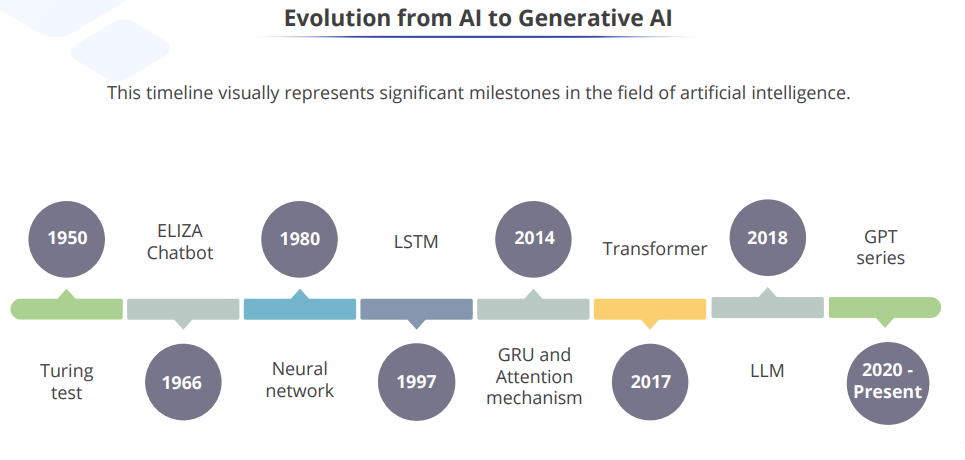

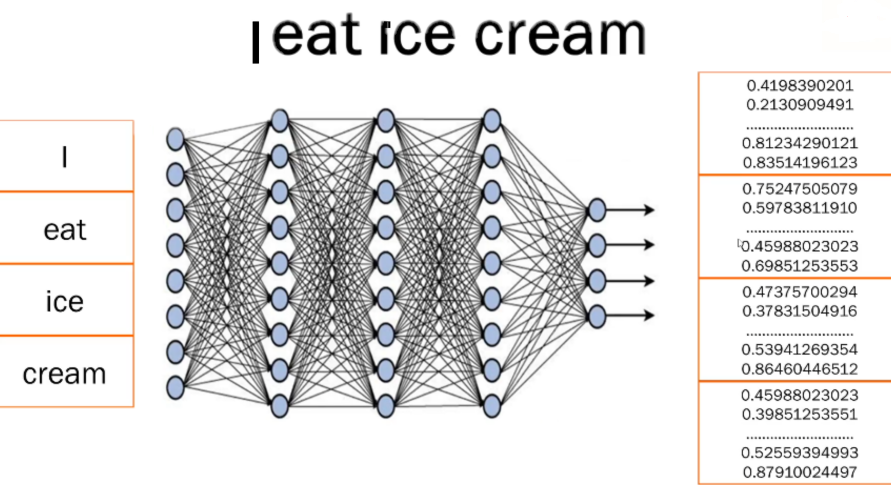

Engineers, recognizing the power of the human brain's layered neural structure, sought to replicate it in machines. They applied this concept to machine learning, giving rise to neural networks.

As you can see, neural networks consist of multiple computational layers. Each layer receives information from the preceding layer, analyzes it, and learns from it. This layered processing improves the network's predictions and its overall output accuracy.
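The layered flow of information can be sketched in a few lines of plain Python. This is a simplified illustration, not a trained model: the weights and biases are hypothetical hand-picked values, whereas a real network learns them from data, and the helper name `layer` is an assumption made for this example.

```python
import math


def sigmoid(z):
    """Squash any number into the range (0, 1)."""
    return 1.0 / (1.0 + math.exp(-z))


def layer(inputs, weights, biases):
    """One layer: each neuron takes a weighted sum of its inputs,
    adds a bias, and applies the sigmoid activation."""
    return [
        sigmoid(sum(w * x for w, x in zip(neuron_weights, inputs)) + b)
        for neuron_weights, b in zip(weights, biases)
    ]


# Hypothetical, hand-picked parameters; a real network learns these.
hidden_weights = [[0.5, -0.2], [0.1, 0.8]]   # two neurons, two inputs each
hidden_biases = [0.0, -0.1]
output_weights = [[1.0, -1.0]]               # one neuron, two inputs
output_biases = [0.2]

inputs = [1.0, 2.0]
hidden = layer(inputs, hidden_weights, hidden_biases)   # first layer sees the raw input
output = layer(hidden, output_weights, output_biases)   # next layer sees only the first layer's output
print(hidden, output)
```

Each call to `layer` consumes only what the previous layer produced, which mirrors the stage-by-stage processing described above.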

Neural networks are the foundation for generative tools like ChatGPT, which we'll explore in detail in later modules. The key takeaway is that for complex tasks like text and image generation, deep learning models built from neural networks generally outperform older machine learning approaches. This advantage stems from their multilayer structure, which allows for extensive data analysis and processing.

Historically, the computational power required to train neural networks was a limiting factor. However, advancements have made them significantly cheaper to train and use, driving their widespread adoption, especially in generative AI applications.

Deep learning is a subset of machine learning that focuses on teaching computers to learn and make decisions by processing data through neural networks inspired by the human brain.

Machines need to be trained on large volumes of data. Garbage in, garbage out (GIGO) means that the quality of a process's output is determined by the quality of its input.

We also need high computational power.

Shift to conversational, contextual understanding

ChatGPT is a language model developed by OpenAI. It is designed for natural language understanding and generation, specifically in a conversational context.

Key terminologies

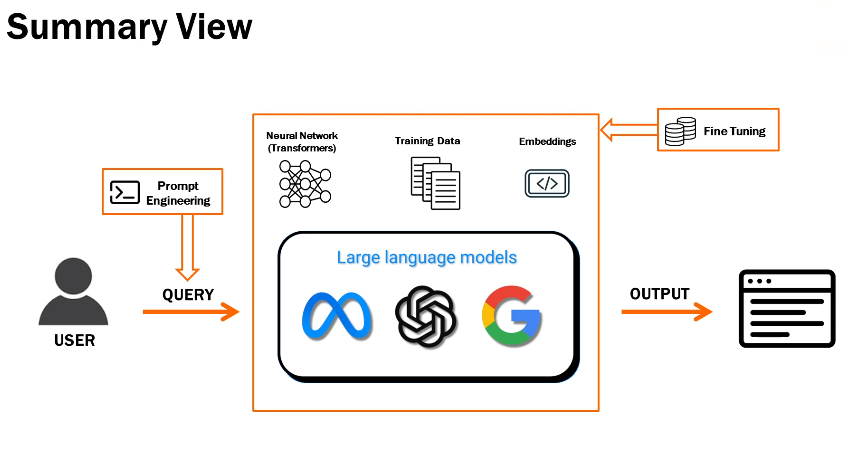

Large Language Models (LLMs) are powerful artificial intelligence models designed for understanding and generating human-like text.

A Large Language Model is a sophisticated mathematical function that predicts what word comes next for any piece of text.
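The idea of "predicting what word comes next" can be illustrated with a deliberately tiny stand-in. The sketch below simply counts which word follows which in a short made-up corpus and picks the most frequent follower. This is an assumption-laden toy: real LLMs use massive neural networks over tokens rather than word-pair counts, and the names `train_bigram` and `predict_next` are hypothetical.

```python
from collections import Counter, defaultdict


def train_bigram(text):
    """Count, for each word, which words follow it in the training text."""
    words = text.lower().split()
    following = defaultdict(Counter)
    for current, nxt in zip(words, words[1:]):
        following[current][nxt] += 1
    return following


def predict_next(model, word):
    """Return the most frequent follower of `word`, or None if the word was never seen."""
    followers = model.get(word.lower())
    if not followers:
        return None
    return followers.most_common(1)[0][0]


# Made-up training text for illustration.
corpus = "the cat sat on the mat and the cat slept on the sofa"
model = train_bigram(corpus)

print(predict_next(model, "the"))
print(predict_next(model, "on"))
print(predict_next(model, "dog"))
```

The toy shares one property with an LLM: it has no rules about language built in, only statistics gathered from the text it was trained on.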

Key Points on LLMs

- Pre-training: trained on a huge corpus of data

- Size and scale: massive neural networks

- Fine-tuning: more targeted training for specific tasks

Use Cases for LLMs:

- Content generation: marketing, advertising, etc.

- Chatbots and virtual assistants: user support, interactions, etc.

- Language translation: expanding communication across languages

- Text summarization: condensing lengthy content

- Q&A: answering questions and providing information

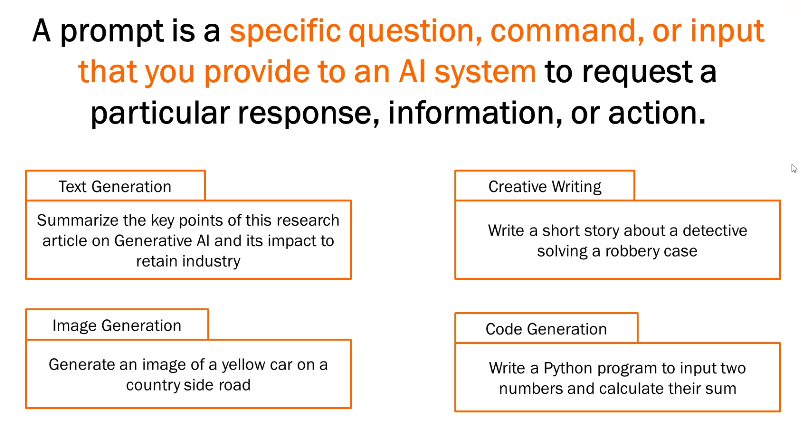

Prompt Engineering

Prompt engineering is the process of crafting well-defined and structured input queries to interact with AI systems, in order to get accurate and relevant responses.

Best Practices for Prompt Engineering

- Clearly convey the desired response. Example: Instead of saying "Tell me about the weather," say "What is the temperature and forecast for tomorrow in London?"

- Provide context or background information. Example: Instead of asking "What is the capital?", say "I'm researching European geography. What is the capital of France?"

- Balance simplicity and complexity. Example: For a quick fact, "What is the boiling point of water?" is fine. For a complex task, a more detailed prompt such as "Write a 500-word summary of the key arguments in the provided research paper" is needed.

- Test and refine iteratively. Example: If the first prompt "Write a short story" produces a vague result, refine it to "Write a short science fiction story about a robot on Mars, with a focus on suspense."
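One way to apply these practices consistently is to assemble prompts from labeled parts instead of typing them ad hoc. The helper below is a hypothetical sketch: `build_prompt` and its `task`, `context`, and `constraints` parameters are names invented for this example and do not belong to any particular library.

```python
def build_prompt(task, context=None, constraints=None):
    """Assemble a structured prompt from a task, optional context,
    and an optional list of constraints."""
    parts = []
    if context:
        parts.append(f"Context: {context}")
    parts.append(f"Task: {task}")
    if constraints:
        parts.append("Constraints:")
        parts.extend(f"- {c}" for c in constraints)
    return "\n".join(parts)


# Providing context, as in the geography example.
prompt = build_prompt(
    task="What is the capital of France?",
    context="I'm researching European geography.",
)

# A refined version of "Write a short story".
story_prompt = build_prompt(
    task="Write a short science fiction story about a robot on Mars.",
    constraints=["Focus on suspense", "Keep it short"],
)

print(prompt)
print(story_prompt)
```

Keeping the parts separate makes iterative refinement easier, because you can change one constraint at a time and compare the responses.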

Embeddings

Machines do NOT understand text. They only understand numbers.

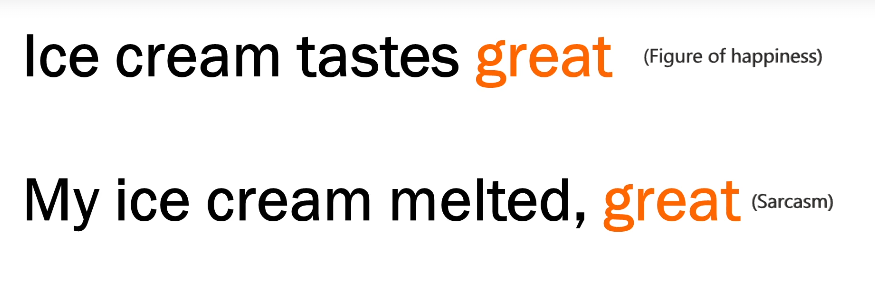

In AI, embeddings are numerical representations of text. They are essential for AI models to understand and work with human language effectively.

Below is the same sentence in two different contexts.

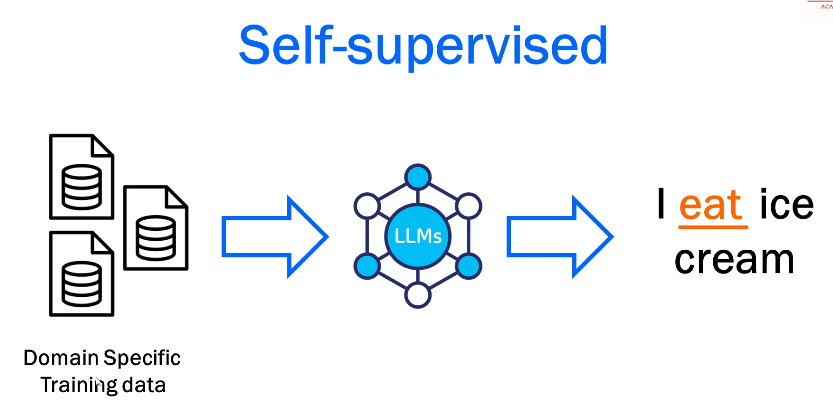
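As a rough sketch of how numbers can capture meaning, the example below compares hand-written vectors using cosine similarity. The three-dimensional vectors are hypothetical values invented for illustration. Real embedding models learn vectors with hundreds or thousands of dimensions from data.

```python
import math

# Hypothetical 3-dimensional embeddings, hand-written for illustration only.
embeddings = {
    "cat": [0.9, 0.8, 0.1],
    "dog": [0.8, 0.9, 0.2],
    "car": [0.1, 0.2, 0.9],
}


def cosine_similarity(a, b):
    """Cosine of the angle between two vectors: near 1.0 means they point
    in almost the same direction, near 0.0 means they are unrelated."""
    dot = sum(x * y for x, y in zip(a, b))
    norm_a = math.sqrt(sum(x * x for x in a))
    norm_b = math.sqrt(sum(y * y for y in b))
    return dot / (norm_a * norm_b)


cat_dog = cosine_similarity(embeddings["cat"], embeddings["dog"])
cat_car = cosine_similarity(embeddings["cat"], embeddings["car"])
print(f"cat vs dog: {cat_dog:.2f}")
print(f"cat vs car: {cat_car:.2f}")
```

With these made-up vectors, "cat" scores closer to "dog" than to "car", which is the kind of relationship that learned embeddings are meant to encode.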

Fine-tuning an LLM is the process of adapting a pre-trained model to perform specific tasks or to cater to a particular domain more effectively.

Why do we do it? While LLMs like ChatGPT excel at providing comprehensive answers to general questions, their knowledge is based on broad pre-training. To handle specific tasks or make use of your organization's proprietary data, further training is necessary. This process, called fine-tuning, allows the model to adapt and learn from your specific dataset, enabling it to generate more targeted and relevant outputs.

Think of it like this: we're taking a general-purpose language model and tailoring it for a specific job. Training it further on a focused dataset lets us achieve better results on that job.

For example, a language model fine-tuned on medical data will provide more accurate and relevant answers to medical questions than a general-purpose model that has not been fine-tuned. Essentially, fine-tuning helps us leverage the power of pre-trained models while optimizing them for specialized tasks.
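The mechanics of fine-tuning, continuing training from already-learned weights instead of starting over, can be shown with a toy one-parameter model. Everything here is an assumption made for illustration: the model `y = w * x`, the made-up "general" and "domain" datasets, and the function names `train` and `mse`. Fine-tuning an actual LLM follows the same principle at a vastly larger scale.

```python
def mse(w, data):
    """Mean squared error of the one-parameter model y = w * x."""
    return sum((w * x - y) ** 2 for x, y in data) / len(data)


def train(w, data, lr=0.05, epochs=50):
    """Run gradient descent on the MSE, starting from the given weight."""
    for _ in range(epochs):
        grad = sum(2 * (w * x - y) * x for x, y in data) / len(data)
        w -= lr * grad
    return w


# Made-up data: a "general" set and a "domain" set that follows a different trend.
general_data = [(x, 2.0 * x) for x in (1.0, 2.0, 3.0)]
domain_data = [(x, 3.0 * x) for x in (1.0, 2.0, 3.0)]

# Pre-training: start from scratch (w = 0) on the general data.
pretrained_w = train(0.0, general_data)

# Fine-tuning: start from the pre-trained weight and take a few steps on domain data.
fine_tuned_w = train(pretrained_w, domain_data, epochs=5)

print("domain error before fine-tuning:", mse(pretrained_w, domain_data))
print("domain error after fine-tuning: ", mse(fine_tuned_w, domain_data))
```

The fine-tuning call reuses `pretrained_w` as its starting point and needs only a few passes over the domain data, which is why fine-tuning is usually cheaper than training from scratch but still depends on having relevant data.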

What fine-tuning is NOT

Fine-tuning is NOT about creating intelligence from scratch

Example: Imagine you want a language model that can write poems in the style of Shakespeare. Fine-tuning wouldn't involve building a completely new AI. Instead, you'd take an existing language model (like GPT-3) and fine-tune it on a dataset of Shakespeare's works. This way, the model learns the patterns and nuances of Shakespearean writing.

Fine-tuning is NOT about eliminating the need for data

Example: Let's say you want to fine-tune a model to generate accurate legal summaries. You'll still need a substantial dataset of legal documents to fine-tune it effectively. Fine-tuning simply adapts the model to a specific domain or task; it doesn't eliminate the need for relevant data.

Fine-tuning is NOT a single universal solution

Example: A model fine-tuned for medical diagnosis wouldn't be suitable for generating marketing copy. Fine-tuning is tailored to specific tasks, and a model optimized for one task might not perform well in another.

Fine-tuning is NOT a magical one-time process

Example: You might fine-tune a model on a dataset of customer reviews to analyze sentiment. However, as you get more customer reviews, you'll need to periodically re-fine-tune the model on the updated dataset to maintain its accuracy and relevance.

Usage

Think of AI as a teammate or companion who works alongside you.

"Human in the loop" refers to the practice of integrating human oversight and intervention in AI decision-making processes to ensure accuracy, fairness, and accountability.